Our clients often ask us to build AI assistants that automate internal business processes, such as:

- supporting users and partners,

- assisting sales teams (e.g., creating commercial offers or handling client communications),

- answering frequently asked questions.

To build these solutions, we use a platform based on open-source components:

- RASA (https://rasa.com/)

- Botfront (a visual interface for RASA)

- Chatwoot (an operator interface, https://www.chatwoot.com/)

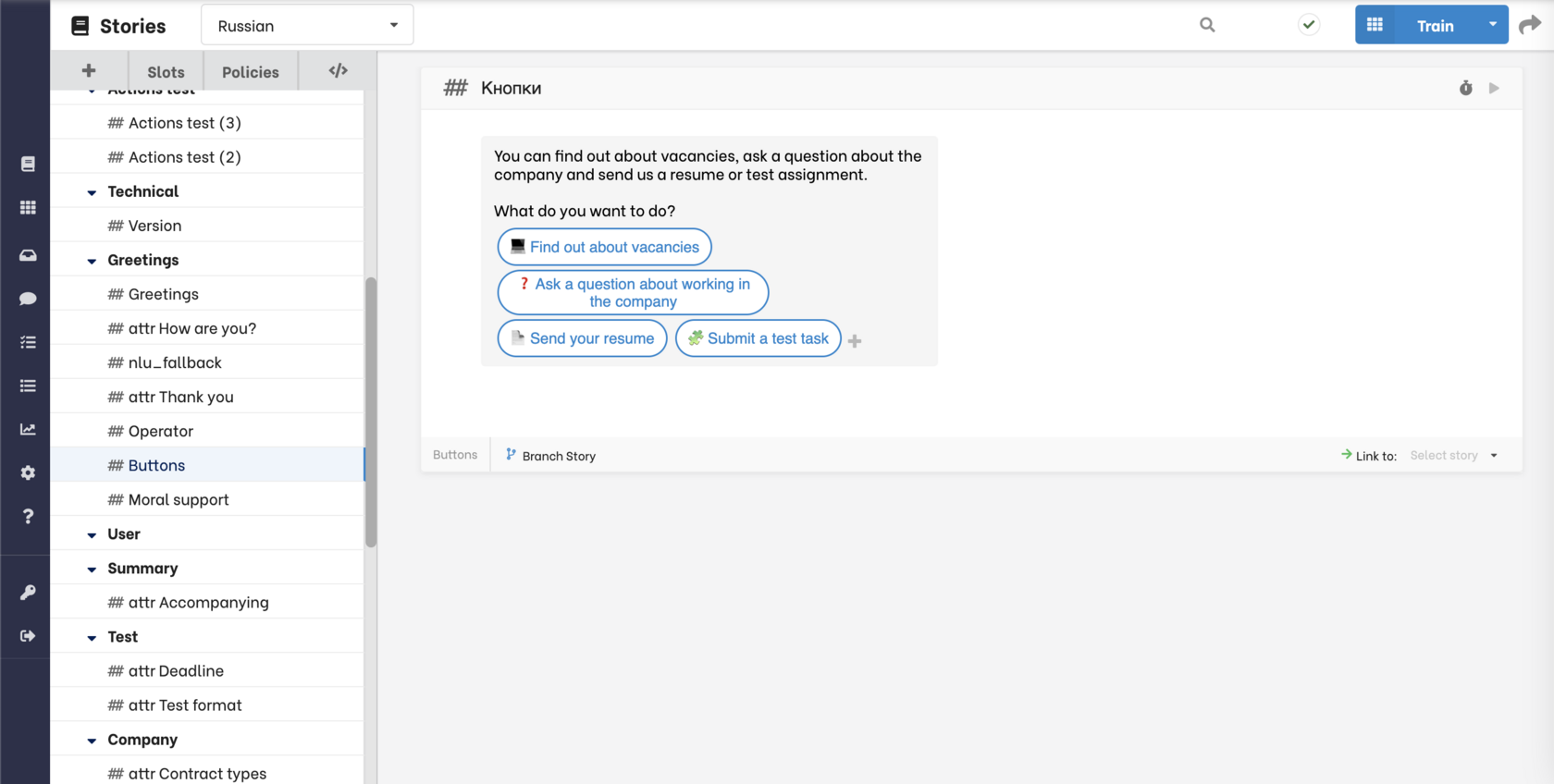

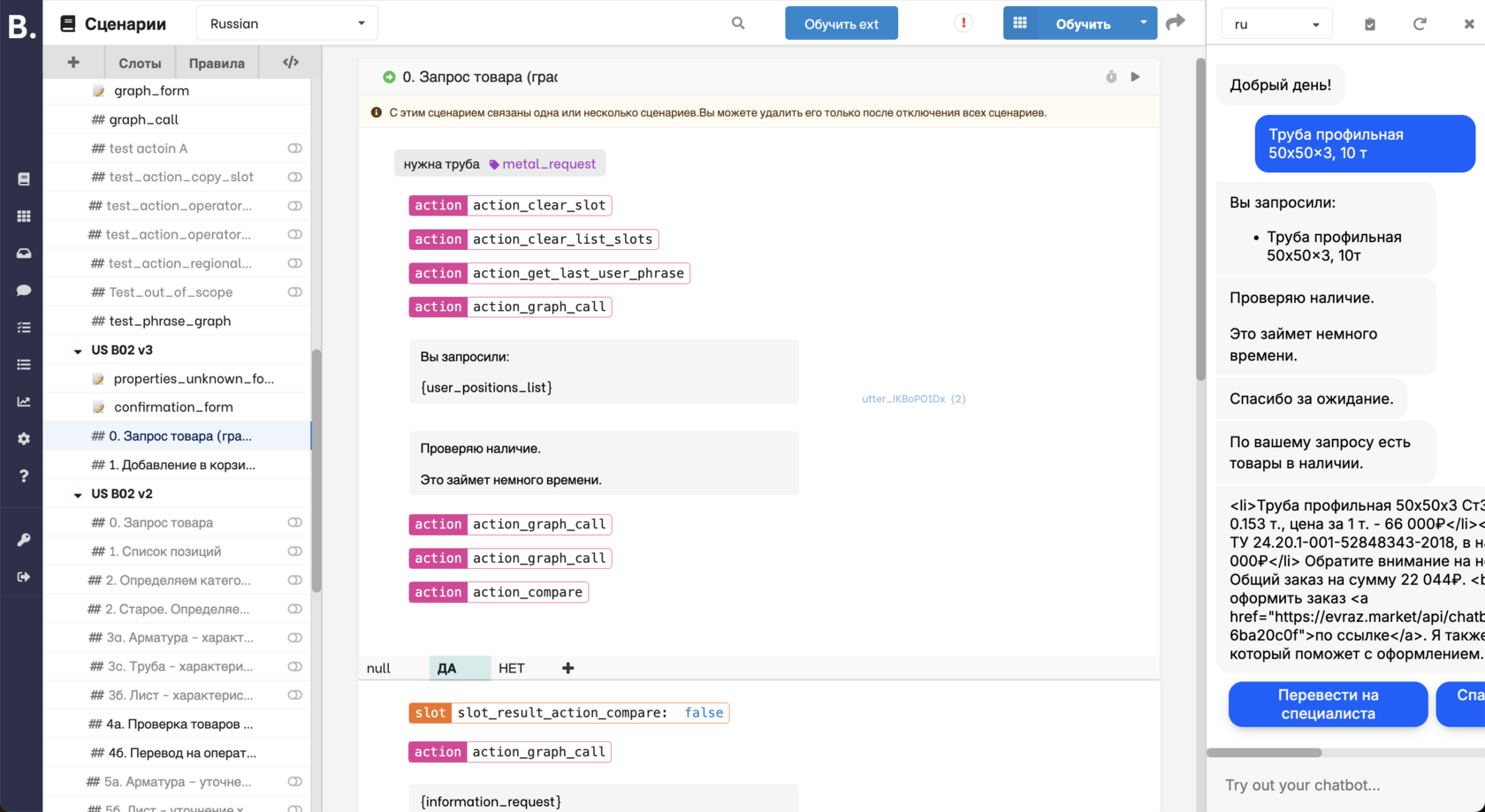

Botfront allows designers to create complex dialogue scenarios without coding, except when integrating with APIs.

The platform can be deployed in the cloud or within the client’s infrastructure to meet security requirements.

AI Assistant Capabilities

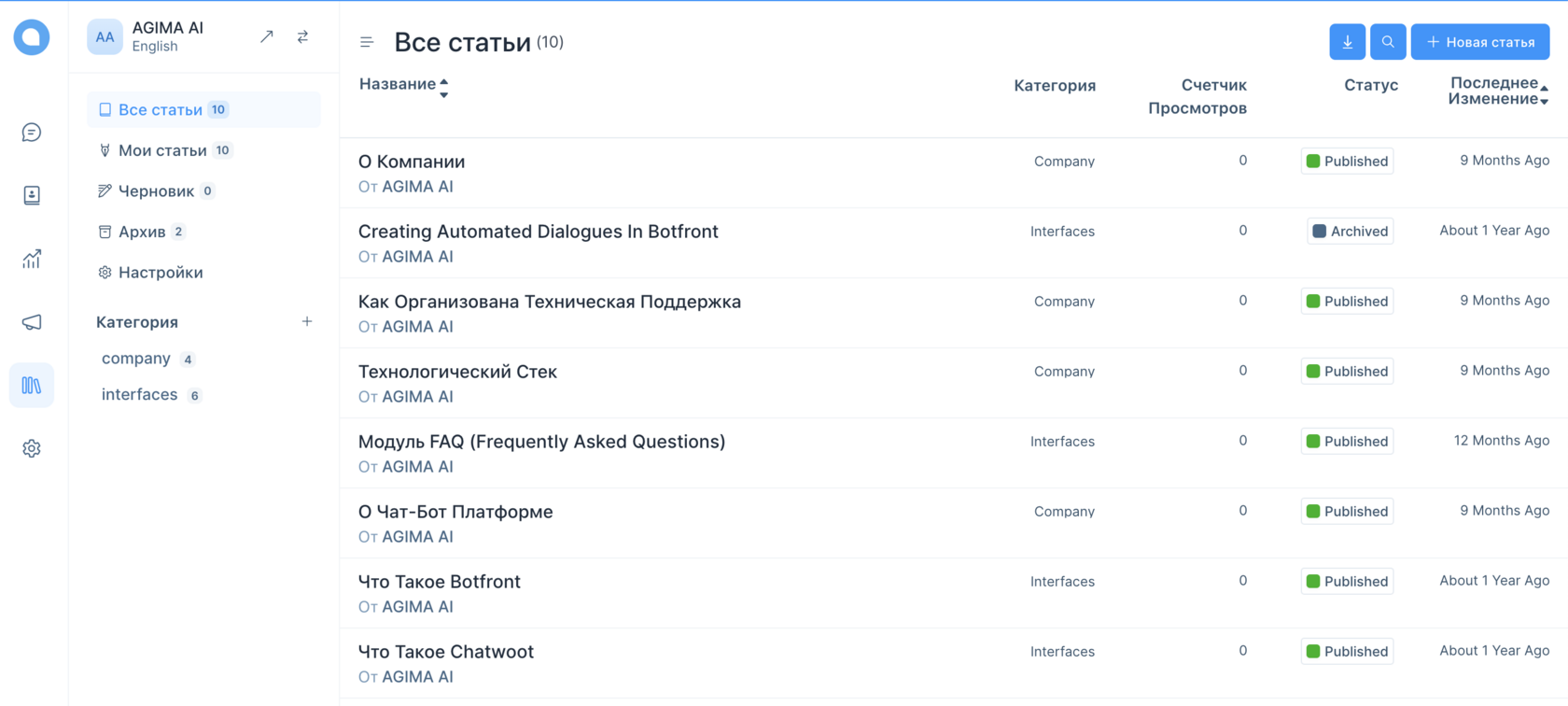

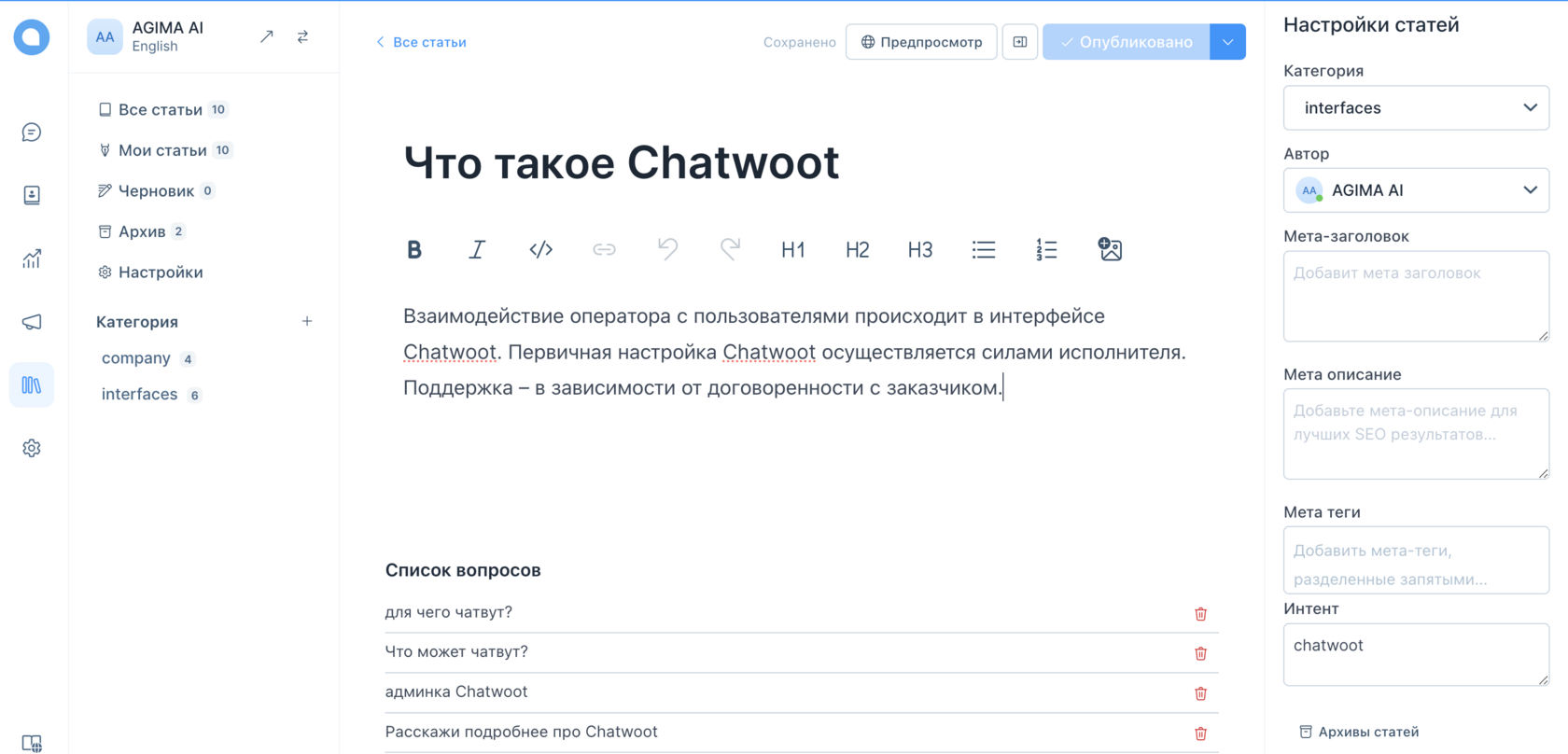

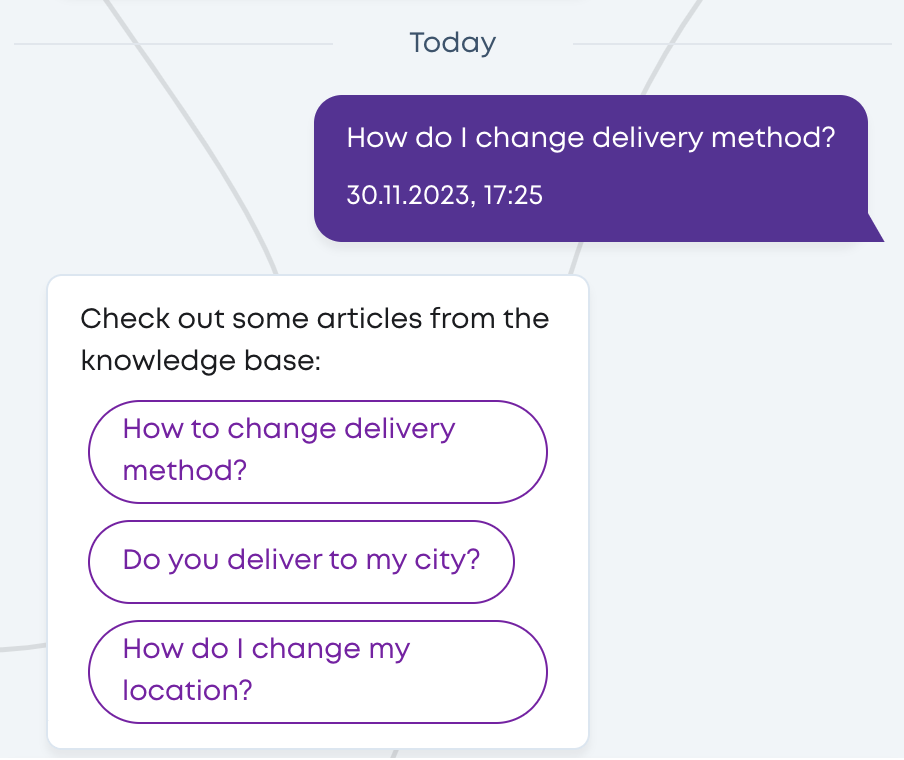

- Answering Frequently Asked Questions

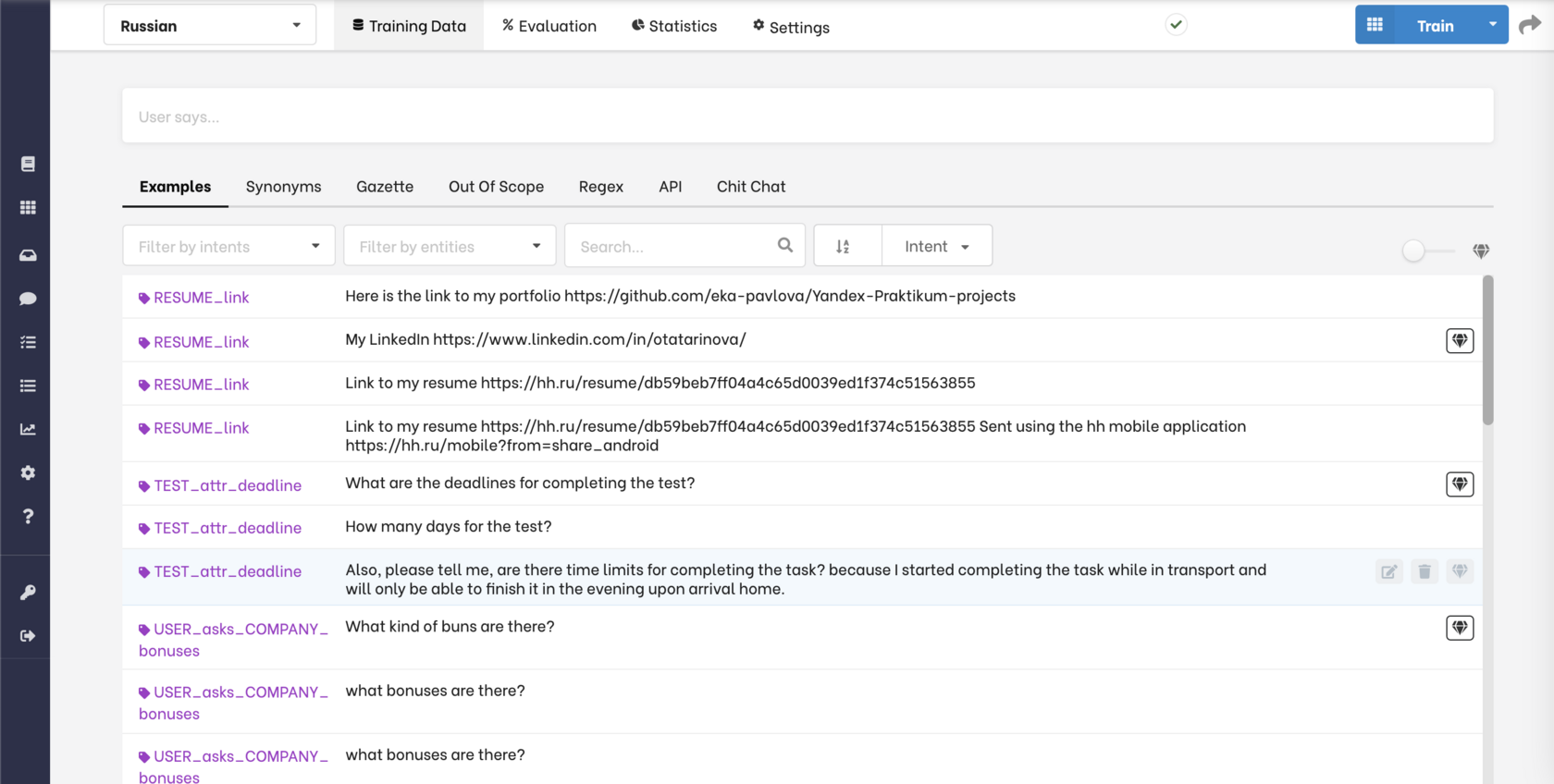

AI assistants respond to questions using information from a knowledge base. Content managers can easily add articles and related questions. When a user submits a query, the assistant searches for relevant articles and provides answers in the chat.
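To illustrate the matching step, here is a minimal sketch that compares a user query against the example questions attached to each article. It assumes simple word-overlap (Jaccard) scoring. A production setup would rely on the knowledge base's own search or on embeddings. `Article`, `find_answer`, the 0.3 threshold, and the sample articles are hypothetical.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Article:
    """A knowledge-base article with the example questions attached to it (hypothetical model)."""
    title: str
    answer: str
    questions: List[str] = field(default_factory=list)


def tokenize(text: str) -> Set[str]:
    # Lowercase and keep only alphanumeric words
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def find_answer(query: str, articles: List[Article], min_score: float = 0.3) -> Optional[str]:
    """Return the answer of the article whose example question best matches the query."""
    query_tokens = tokenize(query)
    best_score, best_article = 0.0, None
    for article in articles:
        for question in article.questions:
            question_tokens = tokenize(question)
            union = query_tokens | question_tokens
            # Jaccard similarity: shared words divided by all distinct words
            score = len(query_tokens & question_tokens) / len(union) if union else 0.0
            if score > best_score:
                best_score, best_article = score, article
    if best_article is None or best_score < min_score:
        return None  # no confident match; the caller decides what to do next
    return best_article.answer


# Hypothetical sample knowledge base
knowledge_base = [
    Article("Opening hours", "We are open 9:00-18:00, Monday to Friday.",
            ["What are your opening hours?", "When are you open?"]),
    Article("Refunds", "Refunds are processed within 14 days.",
            ["How do I get a refund?", "Can I return my order?"]),
]
```

For example, `find_answer("When are you open", knowledge_base)` returns the opening-hours answer, while an unrelated query such as "tell me a joke" returns `None`.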

We often use Chatwoot both as the operator interface and for managing the knowledge base.

AI assistants can also use large language models (like GPT) to generate responses or analyze user data.

- Automating Complex Scenarios

Our chatbots handle complex scenarios such as managing subscriptions or processing payments. These scenarios are built in the Botfront visual interface, which reduces the need for coding.

The platform is built around "stories": sequences of user intents and bot responses.

A story is a dialogue fragment that starts with a user intent and continues with a series of bot responses and further user messages.

To create a dialogue, we need to:

- identify key user intents,

- write story fragments that respond to these intents.
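The following is a simplified sketch of how story fragments can be represented and used to pick the next bot response. It looks for a story whose opening steps exactly match the conversation so far. This is an illustration only: RASA's own dialogue policies (for example, its memoization and TED policies) are trained on stories and generalize beyond exact matches. The intent and response names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Step = Tuple[str, str]  # ("user", intent_name) or ("bot", response_name)


@dataclass
class Story:
    name: str
    steps: List[Step]


# Hypothetical story fragments built around key user intents
STORIES = [
    Story("check_order_status", [
        ("user", "check_order_status"),
        ("bot", "utter_ask_order_number"),
        ("user", "provide_order_number"),
        ("bot", "action_fetch_order_status"),
    ]),
    Story("greet", [
        ("user", "greet"),
        ("bot", "utter_greet"),
    ]),
]


def next_bot_response(history: List[Step], stories: List[Story]) -> Optional[str]:
    """Return the bot step that follows `history` in the first story starting with it."""
    n = len(history)
    for story in stories:
        if n < len(story.steps) and story.steps[:n] == history:
            kind, name = story.steps[n]
            if kind == "bot":
                return name
    return None  # no story covers this conversation
```

With the history `[("user", "check_order_status")]`, the sketch returns `"utter_ask_order_number"`; an intent that no story covers yields `None`.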

When a user interacts with an AI assistant, RASA identifies the intent behind each message and decides the next response. This response can be:

- an answer from the knowledge base (FAQ),

- a reply based on an existing story (e.g., a "check order status" story),

- a call to an external API whose result is returned to the user, or another custom action.
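In RASA, API calls like this are typically implemented as custom actions that run on a separate action server using the `rasa_sdk` package. To stay self-contained, the sketch below does not use `rasa_sdk`. It only shows the shape of a "check order status" action: read a slot, call an API, and turn the reply into a chat message. The endpoint URL, the `order_number` slot, and the `status` field in the response are hypothetical.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Dict

# Hypothetical endpoint of a client's order service (not a real URL)
ORDER_API_URL = "https://orders.example.com/api/orders/{order_id}"


def http_get_json(url: str) -> Dict:
    """Fetch a URL and decode its JSON body (stdlib only, 5-second timeout)."""
    with urllib.request.urlopen(url, timeout=5) as response:
        return json.load(response)


def action_fetch_order_status(slots: Dict[str, str],
                              get_json: Callable[[str], Dict] = http_get_json) -> str:
    """Call the order API and turn its reply into a chat message."""
    order_id = slots.get("order_number")
    if not order_id:
        return "Could you tell me your order number?"
    # Percent-encode the ID so characters like "/" cannot alter the URL path
    url = ORDER_API_URL.format(order_id=urllib.parse.quote(order_id, safe=""))
    try:
        data = get_json(url)
    except OSError:
        # Network failures (urllib's URLError subclasses OSError) become a friendly reply
        return "Sorry, I can't reach the order system right now. Please try again later."
    status = data.get("status", "unknown")
    return f"Order {order_id} is currently: {status}."
```

Passing `get_json` as a parameter keeps the action testable without network access. A fake that returns `{"status": "shipped"}` produces "Order A123 is currently: shipped."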

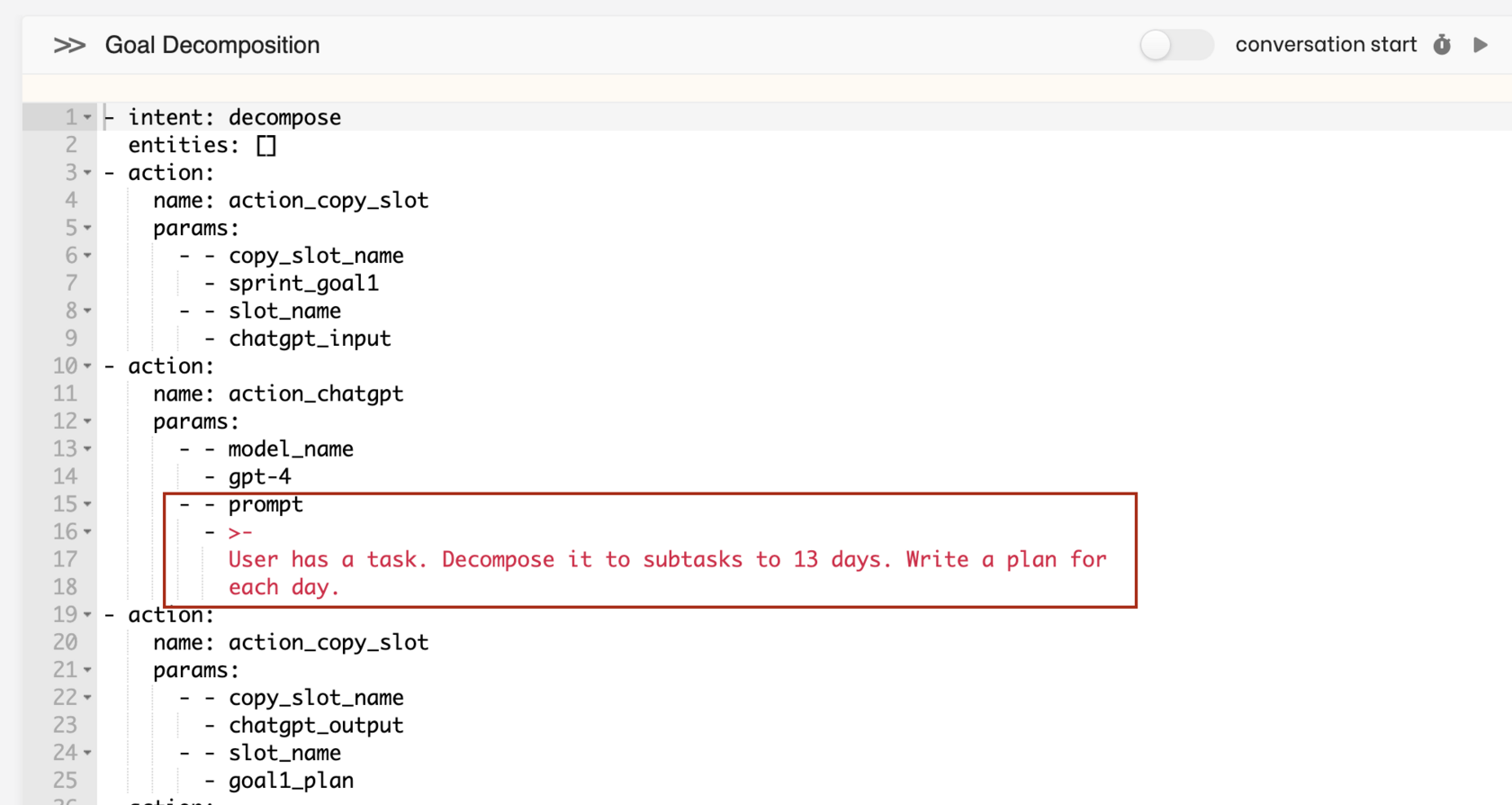

- Integration with Large Language Models (GPT, Claude, and Others)

For more complex dialogues, we use large language models. They help to:

- generate responses based on the knowledge base,

- extract data from user messages (e.g., names or birth dates),

- temporarily take over the conversation to collect information or handle non-standard requests.
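As an example of the data-extraction use case, here is a minimal sketch that asks a model to return a name and birth date as JSON, then validates the reply before trusting it. `complete` is a hypothetical callable that wraps whichever model is used (GPT, Claude, or another) and returns its text output. The prompt wording and field names are also assumptions.

```python
import json
import re
from datetime import date
from typing import Callable, Dict, Optional

# Hypothetical prompt; doubled braces become literal braces after str.format
EXTRACTION_PROMPT = (
    "Extract the person's full name and birth date from the message below.\n"
    'Reply with JSON only: {{"name": string or null, "birth_date": "YYYY-MM-DD" or null}}.\n'
    "Message: {message}"
)


def extract_user_data(message: str, complete: Callable[[str], str]) -> Dict[str, Optional[str]]:
    """Ask an LLM to extract fields, then validate its JSON before using it."""
    result: Dict[str, Optional[str]] = {"name": None, "birth_date": None}
    raw = complete(EXTRACTION_PROMPT.format(message=message))
    # Models sometimes add text around the JSON; keep the span from the first "{" to the last "}"
    match = re.search(r"\{.*\}", raw, re.DOTALL)
    if not match:
        return result
    try:
        data = json.loads(match.group(0))
    except json.JSONDecodeError:
        return result
    name = data.get("name")
    if isinstance(name, str) and name.strip():
        result["name"] = name.strip()
    birth_date = data.get("birth_date")
    if isinstance(birth_date, str):
        try:
            result["birth_date"] = date.fromisoformat(birth_date).isoformat()
        except ValueError:
            pass  # reject malformed dates instead of passing them on
    return result
```

Validating the model output keeps a malformed reply, such as `"17/05/1990"`, from reaching later steps of the dialogue. That field simply stays `None`, and the assistant can ask the user again.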

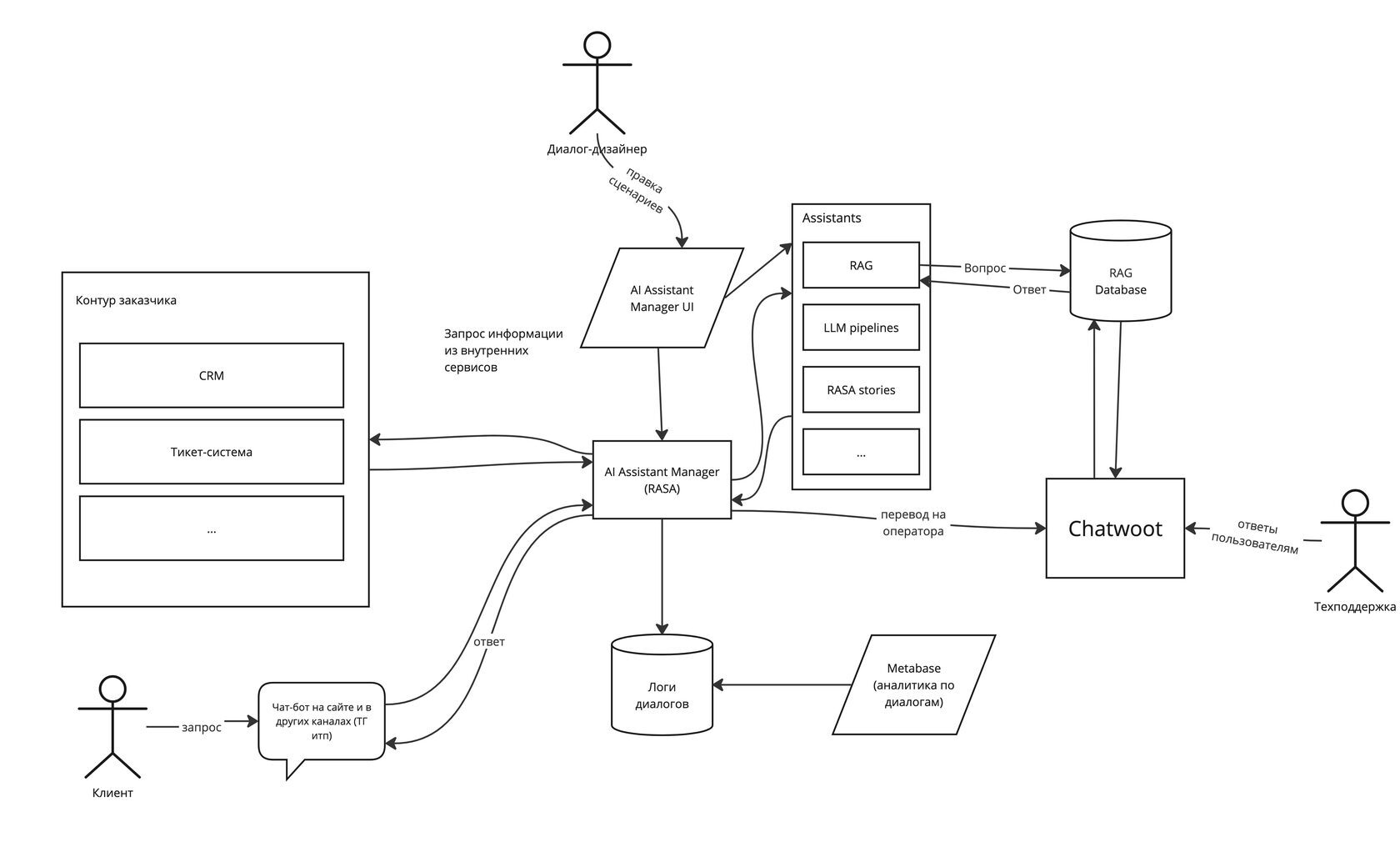

System Architecture

The general architecture of the system consists of the components described below.

System Components

AI Assistant Manager

At the core of the platform is the AI Assistant Manager, which:

- receives user requests,

- determines which agent (e.g., RAG, LLM Pipelines) should handle the request,

- returns the result to the user.

We use RASA for intent recognition and scenario management. Stories in RASA can trigger other assistants.
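The manager's receive, route, and return cycle can be sketched as a small dispatcher. In the platform, RASA performs intent recognition. In this sketch, a keyword function stands in for it, and simple lambdas stand in for the RAG and LLM Pipelines agents. `AssistantManager`, `register`, `handle`, and the intent names are hypothetical.

```python
from typing import Callable, Dict

Agent = Callable[[str], str]  # an agent takes the user's message and returns a reply


class AssistantManager:
    """Receives a message, picks an agent for its intent, and returns the agent's reply."""

    def __init__(self, classify_intent: Callable[[str], str], fallback: Agent) -> None:
        self.classify_intent = classify_intent
        self.fallback = fallback
        self.routes: Dict[str, Agent] = {}

    def register(self, intent: str, agent: Agent) -> None:
        # Adding a new agent type only requires registering it for an intent
        self.routes[intent] = agent

    def handle(self, message: str) -> str:
        intent = self.classify_intent(message)
        agent = self.routes.get(intent, self.fallback)
        return agent(message)


def keyword_intent(message: str) -> str:
    """Toy stand-in for RASA's intent recognition (hypothetical rules)."""
    text = message.lower()
    if "content plan" in text:
        return "generate_content"
    if text.rstrip().endswith("?"):
        return "question"
    return "unknown"


manager = AssistantManager(keyword_intent,
                           fallback=lambda m: "Sorry, I didn't understand. Could you rephrase?")
manager.register("question", lambda m: f"[RAG] searching documents for: {m}")
manager.register("generate_content", lambda m: f"[LLM Pipeline] drafting: {m}")
```

For example, `manager.handle("What is the refund policy?")` is routed to the RAG stand-in, and any unrecognized message goes to the fallback.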

Assistants

Assistants are AI agents available within the system, each performing a specific task. Common types include:

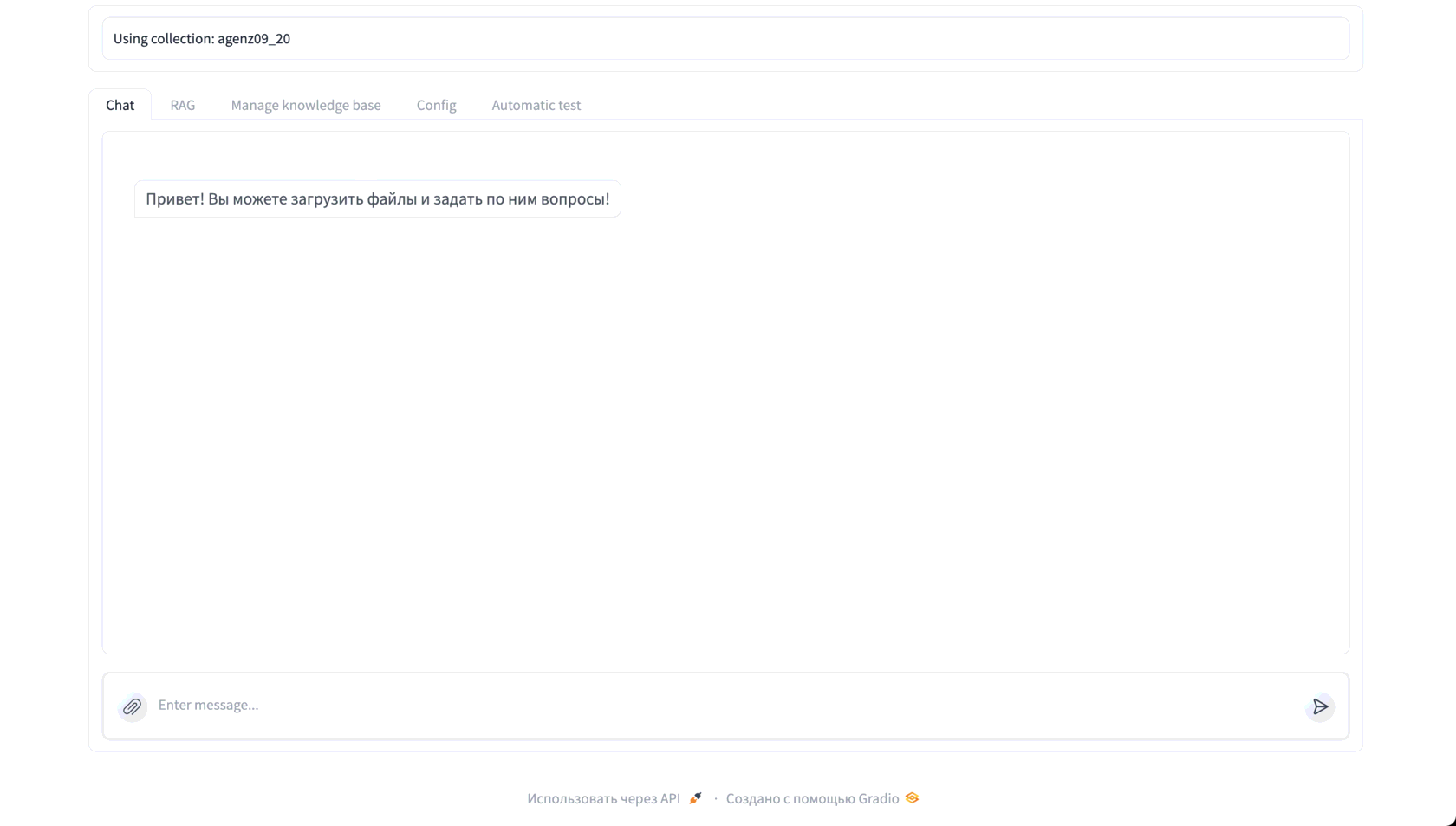

- RAG (Retrieval-Augmented Generation): Answers questions using a collection of uploaded documents. The documents are indexed in a vector database, and the agent retrieves the most relevant passages to ground its answers.

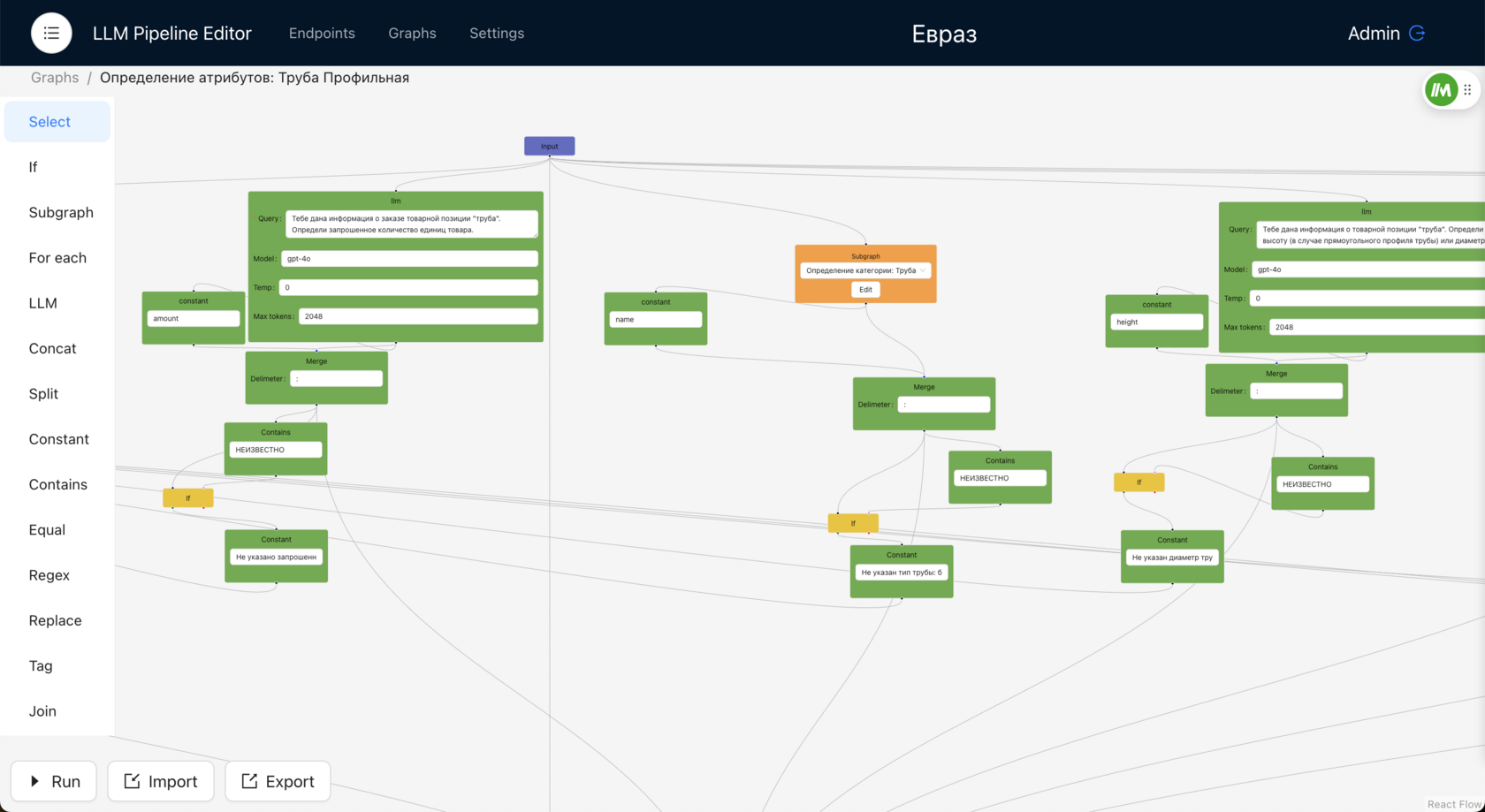

- LLM Pipelines: Handle complex user requests using chains of LLM calls (for example, generating content plans and post drafts, or extracting data from CRM comments).

- RASA Stories: Manage scripted chatbot-user interactions that call internal client services. For example, a user submits a bug report, the story creates a ticket in JIRA, and the ticket number is returned to the user.
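The retrieval side of the RAG agent can be sketched in a few lines. This is a minimal, self-contained illustration: word-count vectors stand in for a learned embedding model, and an in-memory list stands in for the vector database. `embed`, `InMemoryVectorStore`, and `build_rag_prompt` are hypothetical names, and the prompt wording is an assumption. The resulting prompt would then be sent to an LLM to generate the answer.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Stand-in embedding: word counts (a real system would call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Minimal stand-in for a vector database holding (chunk, vector) pairs."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add_document(self, text: str) -> None:
        # Index each blank-line-separated paragraph as its own chunk
        for chunk in text.split("\n\n"):
            if chunk.strip():
                self.items.append((chunk.strip(), embed(chunk)))

    def search(self, query: str, k: int = 2) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_rag_prompt(question: str, store: InMemoryVectorStore, k: int = 2) -> str:
    """Retrieve the top-k chunks and place them in the prompt sent to the LLM."""
    context = "\n---\n".join(store.search(question, k))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

Instructing the model to answer only from the retrieved context is what grounds RAG answers in the uploaded documents rather than in the model's general knowledge.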

The list of agents can be easily expanded.
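As an example of one of these agents, an LLM pipeline can be reduced to its essentials: a sequence of prompt templates, where each step can use the original inputs and the outputs of earlier steps. The sketch below assumes a hypothetical `complete` callable that wraps the chosen model. The two-step content pipeline is illustrative and is not a pipeline from the platform itself.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    name: str      # key under which this step's output is stored
    template: str  # prompt template; may reference the inputs and earlier outputs


def run_pipeline(steps: List[Step], inputs: Dict[str, str],
                 complete: Callable[[str], str]) -> Dict[str, str]:
    """Run LLM calls in sequence, feeding each step the outputs of the previous ones."""
    context = dict(inputs)
    for step in steps:
        prompt = step.template.format(**context)
        context[step.name] = complete(prompt)
    return context


# Hypothetical two-step pipeline: a content plan first, then a draft post based on it
CONTENT_PIPELINE = [
    Step("plan", "Create a content plan of 3 posts about {topic} for {audience}."),
    Step("draft", "Write the first post from this content plan:\n{plan}"),
]
```

Because each step is just data, a designer could add, reorder, or reword steps without changing `run_pipeline`.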

How It Works

AI Assistant Manager UI

This is where dialogue scenarios are created. The UI currently consists of several interfaces:

- Botfront: For creating and configuring dialogue scenarios.

- LPE (LLM Pipeline Editor): For designing complex request-processing pipelines.

- RAG: For managing the knowledge base, uploading documents, and testing responses.

User Roles

Client: Interacts with the chatbot through a familiar channel, such as a messenger or a website.

Dialogue Designer: Works in the interface to configure scenarios and assistant behavior for business needs.

Operator (optional): Uses Chatwoot to take over conversations when a user requests to speak with a human.
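The handoff logic can be sketched as a per-conversation flag. In this illustration, the bot answers until the user asks for a person, then stays silent so the operator can reply. This is a simplified illustration under stated assumptions: keyword matching stands in for a RASA intent such as a hypothetical `request_human`, and the actual transfer would go through Chatwoot's own integration, which is not shown. `Conversation`, `route_message`, and `HANDOFF_PHRASES` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical trigger phrases standing in for a "request human" intent
HANDOFF_PHRASES = ("human", "operator", "real person")


@dataclass
class Conversation:
    id: str
    bot_active: bool = True
    log: List[str] = field(default_factory=list)  # user messages received so far


def route_message(conv: Conversation, message: str,
                  bot_reply: Callable[[str], str]) -> Optional[str]:
    """Return the bot's reply, or None once an operator owns the conversation."""
    conv.log.append(f"user: {message}")
    if not conv.bot_active:
        return None  # the operator sees the message in the operator interface and answers it
    if any(phrase in message.lower() for phrase in HANDOFF_PHRASES):
        conv.bot_active = False  # from now on, messages go to the operator only
        return "I'm transferring you to an operator. Please wait a moment."
    return bot_reply(message)  # normal assistant pipeline
```

Keeping the flag per conversation means one user's handoff does not affect other chats.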

Data Flow and Component Interaction

The user sends a message to the chat.

The AI Assistant Manager receives the message and determines which assistant should process it:

- For questions, it requests data from RAG.

- For complex user requests, it calls the LLM Pipelines assistant.

- For requests that follow a scripted scenario, it triggers the matching RASA story.

The response from the AI Assistant Manager is sent back to the user in the chat.

This architecture makes it possible to develop AI assistants flexibly, add new scenarios, and fix issues with minimal developer involvement.