The Task

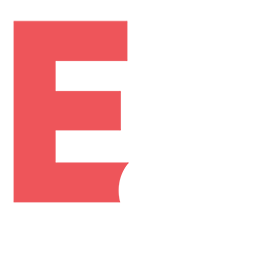

- Develop an application similar to Shazam, but designed for videos. The app identifies the name of a film and provides a list of featured items from the video.

For example, the film Mr. and Mrs. Smith is playing on TV. The user opens the app and points the camera at the screen. The system displays the movie title and a detailed list of items: the characters' clothing, accessories, car brands, and other products uploaded to the database by the rights holder.

DEVELOPMENT

Monitor Recognition

The first step is to train the application to detect a monitor in the camera's view. For this purpose, we collected and labelled images of switched-on monitors.

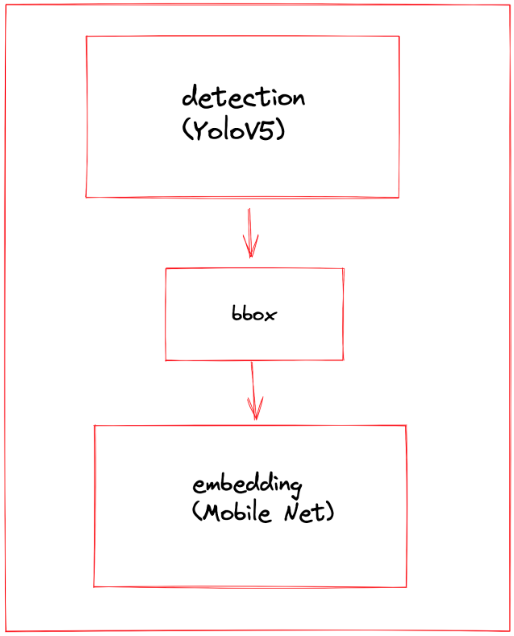
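As an illustration of how a detection could feed the next step, here is a minimal sketch that keeps the highest-scoring monitor box and crops the frame to it. The `Detection` type, the `crop_best_monitor` helper and the 0.5 score threshold are hypothetical names and values chosen for this example; they are not the detector's actual output format.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    # Bounding box in pixel coordinates plus the detector's confidence score.
    x1: int
    y1: int
    x2: int
    y2: int
    score: float


def crop_best_monitor(frame, detections, min_score=0.5):
    """Return the frame region inside the highest-scoring box, or None.

    `frame` is a list of pixel rows; `min_score` is an assumed threshold.
    """
    candidates = [d for d in detections if d.score >= min_score]
    if not candidates:
        return None
    best = max(candidates, key=lambda d: d.score)
    # Slice rows first (y range), then columns (x range) within each row.
    return [row[best.x1:best.x2] for row in frame[best.y1:best.y2]]
```

Cropping to the screen before embedding keeps the room around the TV out of the vector, which is presumably why detection comes first.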

Turning a Frame into a Numeric Vector (Embedding)

We trained an embedder that converts the information in a frame into a numeric vector. The vector space is organised so that similar frames lie close to each other and different frames lie far apart.
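To make "close" and "far apart" concrete, the sketch below compares three toy vectors with cosine similarity. The metric is an assumption: the text does not say which one the project uses, although cosine similarity is a common choice for embedders trained with an angular-margin loss such as ArcFace. The three-dimensional vectors are invented for illustration; real embeddings have many more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy embeddings: frames A and B come from the same scene, C from another.
frame_a = [0.9, 0.1, 0.0]
frame_b = [0.8, 0.2, 0.1]
frame_c = [0.0, 0.1, 0.9]

same_scene = cosine_similarity(frame_a, frame_b)
other_scene = cosine_similarity(frame_a, frame_c)
```

Here `same_scene` comes out much higher than `other_scene`, which is the property the embedder is trained to produce.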

Working with the Database

To identify the film the user is filming, we need a database of films. We took 150 videos, split them into frames and converted each frame into a vector with the embedder. The resulting vectors were loaded into Milvus, a vector database built for fast similarity search.
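The idea of storing frame vectors and querying for the nearest ones can be sketched with a small in-memory index. This is a toy stand-in, not the Milvus API: the `FrameIndex` class and its methods are hypothetical, and it scans every stored vector, whereas a vector database relies on approximate nearest-neighbour indexes to stay fast at scale.

```python
import math


def _normalise(vector):
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


class FrameIndex:
    """Toy in-memory stand-in for a vector database (brute-force search)."""

    def __init__(self):
        self._vectors = []
        self._labels = []

    def add(self, video_id, second, vector):
        # Store unit-length vectors so a dot product equals cosine similarity.
        self._vectors.append(_normalise(vector))
        self._labels.append((video_id, second))

    def search(self, query, top_k=3):
        """Return the top_k ((video_id, second), score) pairs, best first."""
        q = _normalise(query)
        scored = [
            (sum(a * b for a, b in zip(q, v)), label)
            for v, label in zip(self._vectors, self._labels)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [(label, score) for score, label in scored[:top_k]]


index = FrameIndex()
index.add("film_a", 0, [1.0, 0.0])
index.add("film_a", 1, [0.9, 0.1])
index.add("film_b", 0, [0.0, 1.0])
hits = index.search([1.0, 0.05], top_k=2)
```

Each stored vector carries a label (video and timestamp), so a search hit can be traced back to a position in a specific film.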

Creating the Item List

We created and populated a cloud database containing all the information about each video, its segments and the products that appear in the footage.

«This project is unique in terms of machine learning. It was important not only to make a solution with high recognition quality, but also to optimise it for real-time execution on a mobile device. Together with the Amiga team, we ported the recognition pipeline to Dart and got a solution that works perfectly in real time on a wide range of mobile devices on both iOS and Android».

– Andrei Tatarinov, CEO of Epoch8.

HOW VIDEO RECOGNITION WORKS

The application compares frames captured by the user with frames stored in the Milvus database. The database is pre-filled with storyboards of 150 films, with each frame converted into a numeric vector.

The vectors of frames from the user's video are matched against the frame vectors in the Milvus database. Majority voting over the matches then determines the most similar video in the database and the specific segment of that video.
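A minimal sketch of the voting step follows, assuming each user frame has already been matched to its nearest database frame and labelled with a `(video_id, segment_id)` pair. The two-stage order (video first, then segment within that video) and the `vote` helper are assumptions made for illustration; the text only states that majority voting is used.

```python
from collections import Counter


def vote(matches):
    """Pick the winning video, then the winning segment within it.

    `matches` holds one (video_id, segment_id) pair per user frame.
    Returns None when there are no matches.
    """
    if not matches:
        return None
    video_votes = Counter(video for video, _ in matches)
    best_video, _ = video_votes.most_common(1)[0]
    segment_votes = Counter(
        segment for video, segment in matches if video == best_video
    )
    best_segment, _ = segment_votes.most_common(1)[0]
    return best_video, best_segment


matches = [
    ("film_a", 3),
    ("film_a", 3),
    ("film_b", 1),
    ("film_a", 4),
    ("film_b", 1),
]
result = vote(matches)  # film_a has 3 of 5 votes; segment 3 has 2 of those 3
```

Voting over several frames makes the result robust to a single bad match, for example a blurred frame or a scene that looks similar in two films.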

AUTOMATIC UPDATE OF THE VIDEO DATABASE

To add a new video to the database, the data in Milvus has to be updated. This process is automated by Datapipe, our own service, which we developed at Epoch8 to automate data preparation and the training of ML models.

We have already successfully used Datapipe in two other projects: for optical character recognition on a cashback platform and for ML model creation in the Brickit app, which helps to assemble new designs from old parts.

«The service tracks changes made in the cloud database. If a new video appears in the database - the name and link to the file - the service automatically downloads it, crops it, assigns numeric vectors and uploads the vectors to Milvus. The user will then be able to find that video when using the app».
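The change-tracking behaviour described in the quote can be sketched as an incremental sync: find catalogue rows that have not been indexed yet and process only those. This does not use Datapipe's API. The `find_new_videos` and `sync` functions, the row fields and the `process` callback are hypothetical placeholders for the download, crop, embed and upload steps.

```python
def find_new_videos(catalogue, indexed_ids):
    """Return catalogue rows whose id has not been indexed yet."""
    return [row for row in catalogue if row["id"] not in indexed_ids]


def sync(catalogue, indexed_ids, process):
    """Run `process` on every new row, then record the row as indexed."""
    for row in find_new_videos(catalogue, indexed_ids):
        # Placeholder for: download the file, crop, embed, upload vectors.
        process(row)
        indexed_ids.add(row["id"])
    return indexed_ids


catalogue = [
    {"id": 1, "name": "Film A", "url": "https://example.com/a.mp4"},
    {"id": 2, "name": "Film B", "url": "https://example.com/b.mp4"},
]
indexed = {1}          # Film A is already in the vector database
processed = []
sync(catalogue, indexed, processed.append)
```

Running `sync` a second time with the same catalogue does nothing, because every id is already recorded as indexed.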

– Anna Zakutnyaya, ML engineer.

HOW PRODUCT LISTS ARE CREATED

When the matching video segment is found in the Milvus database, we extract all related information (film descriptions and products) from tables hosted in a cloud service. This service lets you create tables and then query them via an API.

The process works as follows:

1. The application identifies a segment of the film.

2. The application provides the user with:

- information about the film;

- a list of products available in the segment.
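The lookup in these steps can be sketched with two small tables held as lists of rows. The table layout, the field names and the `build_response` helper are assumptions for illustration; the real tables live in a cloud service and are queried through its API, which is not shown here.

```python
# Hypothetical rows standing in for the film and product tables.
films = [{"id": "film_a", "title": "Example Film"}]
products = [
    {"film_id": "film_a", "segment": 3, "name": "Leather jacket"},
    {"film_id": "film_a", "segment": 3, "name": "Sunglasses"},
    {"film_id": "film_a", "segment": 4, "name": "Sports car"},
]


def build_response(film_id, segment, films, products):
    """Return the film title and the products listed for one segment."""
    film = next((f for f in films if f["id"] == film_id), None)
    if film is None:
        return None
    items = [
        p["name"]
        for p in products
        if p["film_id"] == film_id and p["segment"] == segment
    ]
    return {"film": film["title"], "products": items}


response = build_response("film_a", 3, films, products)
```

Keeping products in their own table, keyed by film and segment, lets the lists be edited without touching the vector database.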

The product lists are created and updated by the rights holders, who add descriptions and photos themselves.

Technology

- YOLOv5 - a detector.

- MobileNet - an embedder trained on images with an ArcFace layer.

- Milvus - a vector database.

- Grist - tables with video and product information.

- Datapipe - an ML service used to update the Milvus database.

- Flutter - a framework for the mobile application.

RESULTS

- We have developed an ML model capable of recognising 150 films and goods in the frame.

- The film database is automatically expanded.

- User video is processed in real time at a rate of one frame every 200 ms.

- The video recognition accuracy is 96%.

- Film and product identification takes only 2.4 seconds.

The Team

- Andrei Tatarinov, CEO.

- Anna Zakutnyaya, ML engineer.

- Alexandr Kozlov, ML team lead.